During the past few months, Snowflake has been busy introducing new features aimed at streamlining DevOps: between Git integration, Snowflake CLI, CREATE OR ALTER and EXECUTE IMMEDIATE FROM (with Jinja support), it is now really easy to create CI/CD pipelines for database change management without the need for dedicated tools like schemachange or Terraform.

If you haven’t already, I encourage you to take a look to Snowflakes own quick start guide to see how it all plays together.

However, if you are already planning to implement all this in a real life project, you might want to take things a bit further and add a few extra steps to the quick start guide to make things more production ready.

If you want to follow along (or just skip to the end result), the code used to write this post is available on Github at Drake-Analytics/snowflake-devops-demo.

Deploy changed files only

The quick start guide relies on a number of ”deployment scripts” which call EXECUTE IMMEDIATE FROM on the objects that need to be deployed.

This allows fine control over what gets deployed and in which order, but it also means that everything will get redeployed at each run (unless someone manually edits the change scripts).

If your project is small, this might not be a problem (most queries would result in no changes and just execute pretty fast), but as your database grows to contains hundreds of objects, processing everything might take a while.

A better approach would be to find out which files have been changed, and only redeploy those.

Luckily for us, git itself is pretty good at tracking changes: if you are on Github, the Changed Files action can do just that.

On other platforms, this might be a bit more complicated, but a combination of git commands and/or REST APIs should be able to get you there.

This still leaves us with a problem: if we have several changed files, how do we know in which order they should be deployed?

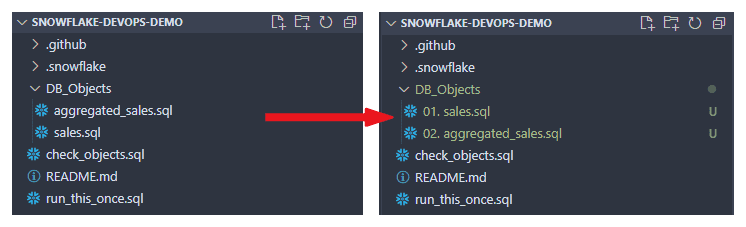

The easiest way is to just add a prefix to the file name, so that table 01. Sales.sql gets deployed before view 02. Aggregate_Sales.sql (which depends on 01. Sales.sql).

Another approach is to sort the changed files by the time they were committed: it still requires some thought from the developer (making smaller commits and being intentional about their order) but it doesn’t dictate any file naming or folder structure.

After all, committing things in same order in which we want them to be deployed feels kind of natural anyway.

Deployment order can be implicitly defined by commit time, or explicitly by file name.

Also, don’t name your tables ”aggregated_sales”: it’s a terrible name and I just picked it because it starts with A 😄.

At this point, our Github action should have two steps that look like this:

- name: Get changed files

id: changed-files

uses: tj-actions/changed-files@v44

with:

files: |

DB_Objects/*.sql

- name: Deploy changed files

env:

ALL_CHANGED_FILES: ${{ steps.changed-files.outputs.all_changed_files }}

ALL_CHANGED_FILES_COUNT: ${{ steps.changed-files.outputs.all_changed_files_count }}

run: |

sorted_files=$(for file in ${ALL_CHANGED_FILES}; do

echo "$(git log -1 --date=iso-strict-local --format="%ai" -- $file); $file"$'\n';

done | sort | cut -d ';' -f 2)

snow git fetch "${REPO}"

echo "Deploying ${ALL_CHANGED_FILES_COUNT} file(s) to ${ENV}"

for file in ${sorted_files}; do

query="EXECUTE IMMEDIATE FROM @${REPO}/branches/${BRANCH}/$file;"

echo ${query} | snow sql --stdin --database "DEVOPSDEMO_${ENV}"

doneNow that we know which files have been modified, we can get rid of the deployment scripts and set up our Github action to just iterate through the changed files (in the right order) and deploy them with the Snowflake CLI.

With our new setup, as soon as we edit or add an .sql file in the DB_Objects folder and push the changes, the action should execute the scripts and CREATE OR ALTER the objects into the appropriate Snowflake database.

Note that removing a file will not trigger any changes to the database: this is to prevent accidentally dropping important tables by deleting files or moving them out of the DB_Objects folder.

We can still drop objects, but we need to be explicit about it and push a file with the right DROP statement.

Connect database objects with their definition files

If you were careful reading the paragraph above, you might have noticed the conditional in should execute the changed scripts: in reality, things might go sideways for a lot of different reasons, and we might end up with a git repository that doesn’t completely reflect the database current state. This might be perfectly fine in some cases, but it’s at least something that we want to keep under control.

Currently, Snowflake does not (yet?) have any easy way to compare an object to a target state or to keep track of how an object was deployed, but if we get a little crafty, we can come up with our own system to keep track of things: let’s take advantage of the Jinja capabilities of the EXECUTE IMMEDIATE FROM command and add a Jinja-parameterized comment to our objects like this:

CREATE OR ALTER TABLE sales.sales (

sale_id NUMBER,

customer_id NUMBER,

product_id NUMBER,

sale_date DATE,

sale_amount DECIMAL(18, 2)

)

-- Jinja magic here ↓

COMMENT='{{deployment_info}}';Note: this approach is suitable for all Snowflake accounts, including those in Standard edition, but customers on Enterprise edition or higher might have cleaner options that don’t ”abuse” the comment field: contact us if you want to take a closer look!

Now, let’s gather some useful information about the deployment, like:

- Which file we are deploying

- The git hash of the file

- Which commit triggered the deployment

We can fetch all this from the action’s context or with a few git commands, format them into a nice JSON format, and then feed it into the EXECUTE IMMEDIATE FROM command.

The final action should now look like this:

name: Deploy changed files

on:

push:

branches:

- main

- dev

jobs:

deploy:

runs-on: ubuntu-latest

name: Deploy

env:

SNOWFLAKE_ACCOUNT: ${{ secrets.SNOWFLAKE_ACCOUNT }}

SNOWFLAKE_USER: ${{ secrets.SNOWFLAKE_USER }}

SNOWFLAKE_PASSWORD: ${{ secrets.SNOWFLAKE_PASSWORD }}

REPO: DEVOPSDEMO_COMMON.PUBLIC.DEVOPSDEMO

BRANCH: ${{ github.ref_name }}

ENV: ${{ (github.ref_name == 'main' && 'PROD') || 'DEV' }}

COMMIT_SHA: ${{ github.sha }}

steps:

- uses: actions/checkout@v4

with:

fetch-depth: 0

- name: Setup Snowflake CLI

uses: Snowflake-Labs/snowflake-cli-action@v1

with:

cli-version: "latest"

default-config-file-path: ".snowflake/config.toml"

- name: Get changed files

id: changed-files

uses: tj-actions/changed-files@v44

with:

files: |

DB_Objects/*.sql

- name: Deploy changed files

env:

ALL_CHANGED_FILES: ${{ steps.changed-files.outputs.all_changed_files }}

ALL_CHANGED_FILES_COUNT: ${{ steps.changed-files.outputs.all_changed_files_count }}

run: |

sorted_files=$(for file in ${ALL_CHANGED_FILES}; do

echo "$(git log -1 --date=iso-strict-local --format="%ai" -- $file); $file"$'\n';

done | sort | cut -d ';' -f 2)

snow git fetch "${REPO}"

echo "Deploying ${ALL_CHANGED_FILES_COUNT} file(s) to ${ENV}"

for file in ${sorted_files}; do

echo $file

file_sha=$(git ls-files -s "$file" | cut -d ' ' -f 2)

deployment_info="{\"deployment_info\": {\"file\": \"${REPO}/branches/${BRANCH}/$file\", \"commit_sha1\": \"${COMMIT_SHA}\", \"file_sha1\": \"${file_sha}\"}}"

query="EXECUTE IMMEDIATE FROM @${REPO}/branches/${BRANCH}/$file USING (deployment_info => '${deployment_info}');"

echo ${query} | snow sql --stdin --database "DEVOPSDEMO_${ENV}"

doneFrom now on, as long as we remember to add the COMMENT='{{deployment_info}}' bit, every object that we deploy using the action will contain metadata pointing to a file (in a specific version) that we used for deployment.

Now, if we want to make sure that every table in the PROD database matches a file in the main branch of the repo, we can just ask Snowflake like this:

LS @DEVOPSDEMO_COMMON.PUBLIC.DEVOPSDEMO/branches/main;

CREATE OR REPLACE TEMPORARY TABLE REPO AS SELECT * FROM TABLE(RESULT_SCAN(LAST_QUERY_ID())) WHERE "name" like '%DB_Objects%';

SELECT

TABLE_SCHEMA,

TABLE_NAME,

PARSE_JSON(COMMENT):"deployment_info":"file" as FILE,

PARSE_JSON(COMMENT):"deployment_info":"commit_sha1" as COMMIT_SHA,

PARSE_JSON(COMMENT):"deployment_info":"file_sha1" as FILE_SHA,

IFF(REPO."sha1" IS NULL, 'Not ok!', 'Ok') as MATCHES_REPO

FROM DEVOPSDEMO_PROD.INFORMATION_SCHEMA.TABLES

LEFT JOIN REPO ON FILE_SHA = REPO."sha1"

WHERE

TABLE_CATALOG = 'DEVOPSDEMO_PROD'

AND

TABLE_SCHEMA != 'INFORMATION_SCHEMA'

;This will return a list of all tables in the DEVOPSDEMO_PROD database and flag them as ”Ok” or ”Not ok” based on whether or not a file with the same hash can be found in the repository.

Similar queries can be written to check the other way around (do we have files in the repo that do not match any table?) or to include other object types (most views in the INFORMATION_SCHEMA contain the COMMENT column).

Conclusion

With the recent rollouts, Snowflake has made life a whole lot easier for developers: most of the new features are still in public preview, but we like how they allow us to keep everything in the database under version control with minimal hassle and no additional tools (other than a git platform).

We look forward to see what other DevOps features Snowflake has on the way!